97 Steps from Marketing Promise to Working Reality

The real story of installing NVIDIA Cosmos, Isaac Sim, and Isaac Lab for industrial robotics development

The NVIDIA Cosmos documentation presents a clean narrative: run pip install cosmos-predict2 and you're ready to work with world foundation models for robotics. The Isaac Sim guides suggest an equally straightforward installation. After 97 debugging steps spanning library conflicts, container crashes, and GLIBC version battles, I have a different story to tell.

This isn't another sanitized tutorial or marketing demo. This is the actual engineering journey of building the Viking ARC system, which integrates AI decision-making with humanoid robotics for industrial applications. What follows documents what really happens when enterprise requirements meet bleeding-edge AI frameworks.

The Challenge: Real Industrial Robotics

I'm Bill Campbell, Director of AR/XR Cobot Development at Lavorro Inc. We develop Lucy Bot, an LLM/ML/AI system for semiconductor manufacturing environments, along with data pipeline solutions for tool integration. My background spans semiconductor tool control software and SECS/GEM protocols - I understand the precision and reliability requirements of industrial automation.

The Viking ARC project combines Lucy Bot's intelligence with physical robot capabilities. The use case requires:

- Real-time decision making in critical situations

- Physics-based simulation for training scenarios

- GPU acceleration for responsive AI inference

- Integration with existing industrial control systems

The Promised Stack

The documentation suggested a straightforward path:

- NVIDIA Cosmos: World foundation models for robotics training

- Isaac Sim: Physics simulation and digital twin development

- Isaac Lab: Reinforcement learning framework

- GPU Acceleration: CUDA support for real-time performance

Marketing materials implied these components would work together seamlessly. Installation guides suggested simple pip commands and container deployments.

What Actually Happened

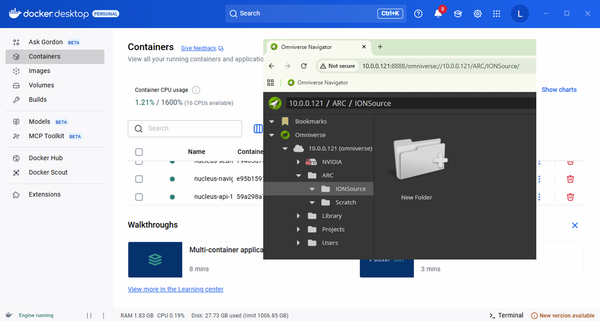

Steps 1-15: The Docker Deception

Started with the obvious choice: NVIDIA's official containers. Docker builds consistently failed while compiling flash-attention, as the memory-intensive compilation steps exhausted system resources and crashed the build. Multiple container approaches failed the same way.
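
One common mitigation for out-of-memory crashes during flash-attention builds is to cap the number of parallel compile jobs with the MAX_JOBS environment variable, which the flash-attention build honors. The sketch below picks a value from installed RAM. The 4 GB-per-job figure is an assumption for illustration, not a measurement from this deployment, and suggest_max_jobs is a hypothetical helper.

```python
import os


def suggest_max_jobs(total_ram_gb: float, cpu_count: int, gb_per_job: float = 4.0) -> int:
    """Return a conservative number of parallel compile jobs.

    gb_per_job is an ASSUMED peak memory cost per compiler process;
    tune it after watching a real build on your machine.
    """
    by_memory = int(total_ram_gb // gb_per_job)
    # Never exceed the CPU count, and always allow at least one job.
    return max(1, min(cpu_count, by_memory))


def detect_total_ram_gb() -> float:
    # Linux-only: physical pages times page size, converted to GiB.
    pages = os.sysconf("SC_PHYS_PAGES")
    page_size = os.sysconf("SC_PAGE_SIZE")
    return pages * page_size / 1024**3


if __name__ == "__main__":
    jobs = suggest_max_jobs(detect_total_ram_gb(), os.cpu_count() or 1)
    # Export this before building, e.g. via an ENV line in a Dockerfile.
    print(f"MAX_JOBS={jobs}")
```

Capping jobs trades build time for stability. On a memory-constrained build host, a slower build that finishes beats a fast one that crashes.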

Steps 16-35: The CUDA Maze

Attempting a pip installation revealed library path conflicts. PyTorch detected CUDA, but Cosmos couldn't find the cuDNN libraries. Libraries installed in user space weren't visible to system tools, and the dynamic linker cache (ldconfig) didn't list the required NVIDIA runtime libraries.
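
One way to see the user-space versus system split is to look for the cuDNN shared objects in both places. pip-installed NVIDIA wheels such as nvidia-cudnn-cu12 unpack their .so files under site-packages/nvidia/cudnn/lib, a directory the dynamic linker does not search by default. The sketch below scans a list of candidate directories. That list is an assumption to adapt to your distribution, and find_shared_libs is a hypothetical helper.

```python
import glob
import os
import site
from pathlib import Path


def find_shared_libs(pattern: str, directories: list[str]) -> dict[str, list[str]]:
    """Map each existing directory to the shared libraries matching pattern."""
    found: dict[str, list[str]] = {}
    for directory in directories:
        if os.path.isdir(directory):
            matches = sorted(glob.glob(os.path.join(directory, pattern)))
            if matches:
                found[directory] = matches
    return found


def candidate_dirs() -> list[str]:
    # Typical system locations on Ubuntu (assumed; adjust as needed).
    dirs = ["/usr/lib/x86_64-linux-gnu", "/usr/local/cuda/lib64"]
    # Where pip wheels such as nvidia-cudnn-cu12 place their libraries.
    for sp in site.getsitepackages() + [site.getusersitepackages()]:
        dirs.append(str(Path(sp) / "nvidia" / "cudnn" / "lib"))
    # Anything already listed on LD_LIBRARY_PATH.
    dirs += [d for d in os.environ.get("LD_LIBRARY_PATH", "").split(":") if d]
    return dirs


if __name__ == "__main__":
    for directory, libs in find_shared_libs("libcudnn*.so*", candidate_dirs()).items():
        print(directory)
        for lib in libs:
            print("  ", os.path.basename(lib))
```

If cuDNN shows up only under site-packages, PyTorch can still load it, but other tools that rely on the linker's default search path will not find it.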

Steps 36-55: Library Path Hell

Created symlinks manually for missing libraries. Set the LD_LIBRARY_PATH environment variable. Discovered that transformer_engine required GLIBC 2.38, while Ubuntu 22.04 provides 2.35. Each fix revealed new missing dependencies.
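
Binary wheels built against a newer GLIBC fail at load time on an older system, so checking the runtime version up front saves a round of trial and error. This is a minimal sketch that assumes a glibc-based Linux, where os.confstr("CS_GNU_LIBC_VERSION") returns a string such as "glibc 2.35" on Ubuntu 22.04. The 2.38 threshold is the transformer_engine figure mentioned in this step, and the helper names are hypothetical.

```python
import os


def parse_glibc(version_string: str) -> tuple[int, int]:
    """Turn 'glibc 2.35' into (2, 35)."""
    number = version_string.split()[-1]
    major, minor = number.split(".")[:2]
    return int(major), int(minor)


def glibc_at_least(required: tuple[int, int], version_string: str) -> bool:
    # Tuple comparison orders by major version first, then minor.
    return parse_glibc(version_string) >= required


if __name__ == "__main__":
    try:
        current = os.confstr("CS_GNU_LIBC_VERSION")  # e.g. "glibc 2.35"
    except (AttributeError, ValueError, OSError):
        current = None
    if not current:
        print("Not a glibc system; this check does not apply.")
    else:
        ok = glibc_at_least((2, 38), current)
        print(f"{current}: {'meets' if ok else 'is below'} the GLIBC 2.38 requirement")
```

A failed check here means you need a different wheel or a newer OS release. Symlinks and LD_LIBRARY_PATH changes cannot supply a newer GLIBC.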

Steps 56-75: Version Compatibility Wars

Isaac Lab required Python 3.11, but the development environment used 3.10. Compatibility between Isaac Sim and Isaac Lab versions added further constraints. Bleeding-edge AI frameworks don't maintain stable dependency trees.

Steps 76-85: System-Wide CUDA Installation

Realized user-space CUDA libraries weren't sufficient. Installed the full CUDA toolkit system-wide and set the PATH and CUDA_HOME environment variables to point at it. This resolved most library detection issues.
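
The environment configuration from this phase can be sketched as a small helper that derives CUDA_HOME, PATH, and LD_LIBRARY_PATH from a toolkit root. /usr/local/cuda is the conventional symlink created by NVIDIA's toolkit installers, but treat it as an assumption and point the helper at your actual install. cuda_env and prepend_unique are hypothetical names.

```python
import os


def prepend_unique(entry: str, existing: str) -> str:
    """Put entry first in a colon-separated path list, dropping duplicates."""
    parts = [p for p in existing.split(":") if p and p != entry]
    return ":".join([entry] + parts)


def cuda_env(cuda_home: str, base: dict[str, str]) -> dict[str, str]:
    env = dict(base)  # leave the caller's mapping untouched
    env["CUDA_HOME"] = cuda_home
    env["PATH"] = prepend_unique(os.path.join(cuda_home, "bin"), env.get("PATH", ""))
    env["LD_LIBRARY_PATH"] = prepend_unique(
        os.path.join(cuda_home, "lib64"), env.get("LD_LIBRARY_PATH", "")
    )
    return env


if __name__ == "__main__":
    env = cuda_env("/usr/local/cuda", dict(os.environ))
    # Emit shell export lines to paste into ~/.bashrc or a setup script.
    for key in ("CUDA_HOME", "PATH", "LD_LIBRARY_PATH"):
        print(f'export {key}="{env[key]}"')
```

Prepending rather than appending makes the system toolkit win over any stale copies found later on the path.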

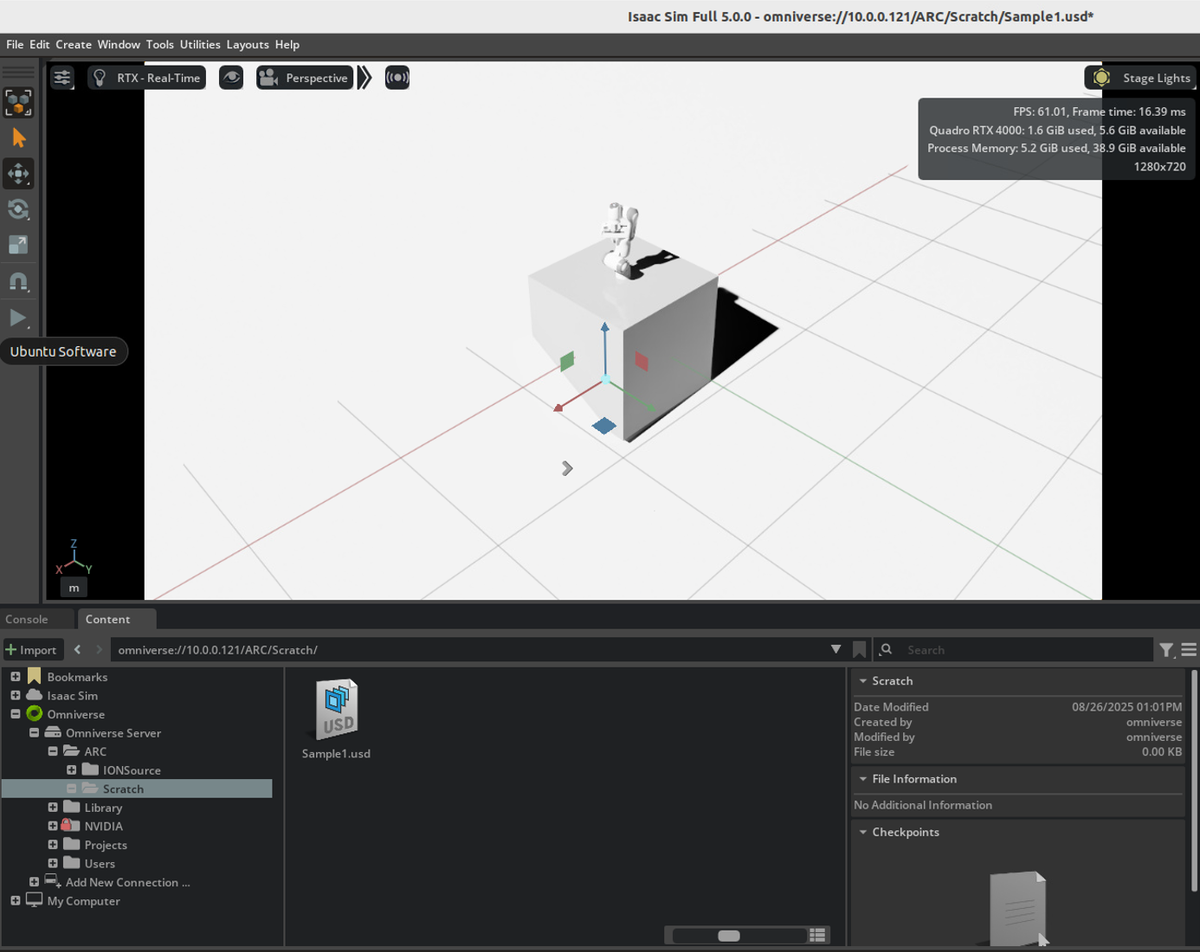

Steps 86-97: Integration Victory

Isaac Sim 5.0.0 proved compatible with Isaac Lab's requirements. The final configuration achieved working GPU acceleration with basic Cosmos functionality. All major components became operational, despite some remaining pipeline limitations.

What Actually Works

After 97 steps of debugging, here's the distilled process that produces a working system:

Foundation Setup

- Install NVIDIA drivers and verify GPU detection

- Install system-wide CUDA toolkit (not just user-space libraries)

- Configure proper PATH and CUDA_HOME environment variables

- Install NVIDIA Container Toolkit for future container needs
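
For the first item, nvidia-smi can report each GPU's name and total memory in machine-readable form through --query-gpu=name,memory.total --format=csv,noheader,nounits. The sketch below runs that query and parses the result. The helper names are hypothetical.

```python
import shutil
import subprocess


def parse_gpu_csv(text: str) -> list[tuple[str, int]]:
    """Parse 'name, memory_mib' lines from nvidia-smi CSV output."""
    gpus = []
    for line in text.strip().splitlines():
        # rsplit so a comma inside a GPU name cannot break parsing.
        name, memory = line.rsplit(",", 1)
        gpus.append((name.strip(), int(memory.strip())))
    return gpus


def query_gpus() -> list[tuple[str, int]]:
    if shutil.which("nvidia-smi") is None:
        raise RuntimeError("nvidia-smi not found: install or repair the NVIDIA driver first")
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_csv(out)


if __name__ == "__main__":
    for name, mib in query_gpus():
        print(f"{name}: {mib} MiB ({mib / 1024:.1f} GiB)")
```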

Core Installation

- Direct pip installation: pip install cosmos-predict2[cu126]

- Isaac Sim 5.0.0 installation via official channels

- Isaac Lab installation: pip install isaaclab

- GPU acceleration verification and library path configuration
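
The GPU acceleration verification step can be done from Python once PyTorch is installed. This sketch uses only public torch calls (torch.cuda.is_available, torch.version.cuda, torch.backends.cudnn.version, torch.cuda.get_device_name) and degrades gracefully if torch is missing or fails to load its native libraries. It checks what PyTorch can see, not Cosmos or Isaac Sim themselves. gpu_report is a hypothetical helper.

```python
def gpu_report() -> dict:
    """Summarize what PyTorch can see; safe to call without torch installed."""
    report = {"torch": None, "cuda_available": False, "cuda_runtime": None,
              "cudnn": None, "device": None, "error": None}
    try:
        import torch
    except (ImportError, OSError) as exc:
        # OSError covers native libraries that fail to load at import time.
        report["error"] = f"{type(exc).__name__}: {exc}"
        return report
    report["torch"] = str(torch.__version__)
    report["cuda_available"] = bool(torch.cuda.is_available())
    report["cuda_runtime"] = torch.version.cuda  # None for CPU-only builds
    if report["cuda_available"]:
        # Returns None if cuDNN is not available to this PyTorch build.
        report["cudnn"] = torch.backends.cudnn.version()
        report["device"] = torch.cuda.get_device_name(0)
    return report


if __name__ == "__main__":
    for key, value in gpu_report().items():
        print(f"{key:15} {value}")
```

A report with cuda_available true but cudnn empty matches the "PyTorch detected CUDA, but cuDNN was missing" symptom from the debugging phase.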

Critical Requirements

- Ubuntu 22.04 LTS (tested platform)

- Python 3.10 minimum; Python 3.11 required for Isaac Lab

- System-wide CUDA installation (user-space insufficient)

- Sufficient GPU memory (8GB+ recommended)
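
The requirements above can be turned into a quick preflight check. This is a minimal sketch. The thresholds mirror the list: Python 3.11 (the Isaac Lab requirement, which also covers the 3.10 minimum) and 8 GB of GPU memory. The function name and reporting style are illustrative assumptions. Inputs are passed as arguments so the check can be fed from sys.version_info and from the memory figures nvidia-smi reports.

```python
import os
import sys
from typing import List, Optional, Tuple

MIN_PYTHON = (3, 11)    # Isaac Lab requirement in this deployment
MIN_GPU_MIB = 8 * 1024  # "8GB+ recommended"; adjust if your driver reports slightly less


def preflight(python: Tuple[int, int], gpu_mib: List[int], cuda_home: Optional[str]) -> List[str]:
    """Return human-readable problems; an empty list means all checks passed."""
    problems = []
    if python < MIN_PYTHON:
        problems.append(f"Python {python[0]}.{python[1]} is older than 3.11")
    if not gpu_mib:
        problems.append("no NVIDIA GPU detected")
    elif max(gpu_mib) < MIN_GPU_MIB:
        problems.append(f"largest GPU has {max(gpu_mib)} MiB, below the 8 GB guideline")
    if not cuda_home or not os.path.isdir(cuda_home):
        problems.append("CUDA_HOME is unset or does not point to a directory")
    return problems


if __name__ == "__main__":
    # GPU memory is hard-coded here; feed it from nvidia-smi in a real script.
    issues = preflight(tuple(sys.version_info[:2]), [8192], os.environ.get("CUDA_HOME"))
    print("\n".join(issues) if issues else "preflight passed")
```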

Lessons for Your Deployment

Container Reality

Marketing promises of "just run our container" often fail in practice. Container builds for complex AI frameworks can crash during memory-intensive compilation, as the flash-attention builds did here. Native installation provides better debugging visibility and control.

Library Management

Many AI frameworks assume system-wide library installations. Libraries installed in user space via pip create path conflicts that are difficult to diagnose. Plan for a system-wide CUDA toolkit installation from the beginning.

Version Constraints

Bleeding-edge AI tools have rapidly evolving dependencies. Version compatibility matrices change faster than documentation updates. Expect significant time investment in dependency resolution.

Enterprise Considerations

Industrial deployments require stability over cutting-edge features. Consider using slightly older, more stable versions rather than latest releases. Document exact version combinations that work in your environment.
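
Documenting exact version combinations is easy to automate. The minimal sketch below uses only the standard library to snapshot the interpreter, glibc, and installed distribution versions to JSON. cosmos-predict2 and isaaclab are the distributions installed in this deployment. isaacsim and torch are assumptions about how your environment names the Isaac Sim and PyTorch distributions.

```python
import json
import platform
from importlib import metadata


def installed_version(dist: str):
    """Return the installed version string, or None if the distribution is absent."""
    try:
        return metadata.version(dist)
    except metadata.PackageNotFoundError:
        return None


def environment_manifest(dists: list) -> dict:
    libc, libc_version = platform.libc_ver()  # ('', '') on non-glibc systems
    return {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "libc": f"{libc} {libc_version}".strip(),
        # None marks a distribution that is not installed in this environment.
        "packages": {d: installed_version(d) for d in dists},
    }


if __name__ == "__main__":
    manifest = environment_manifest(["cosmos-predict2", "isaacsim", "isaaclab", "torch"])
    with open("environment-manifest.json", "w") as fh:
        json.dump(manifest, fh, indent=2, sort_keys=True)
    print(json.dumps(manifest, indent=2, sort_keys=True))
```

Committing the manifest next to your install scripts records a known-good combination that you can diff when an upgrade breaks something.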

The Working Reality

After 97 steps, we achieved:

- Functional Cosmos installation with basic capabilities

- Isaac Sim 5.0.0 with URDF import functionality

- Isaac Lab integration for reinforcement learning

- GPU acceleration on Quadro RTX 4000

- Foundation for industrial robotics development

Some advanced pipeline features remain problematic due to library conflicts, but core functionality enables real project development. The gap between marketing promises and enterprise deployment reality is significant, but not insurmountable.

Next Steps in Viking ARC Development

With the foundation established, development continues on:

- PM01 humanoid robot integration (pending URDF availability)

- Digital twin environment creation for industrial scenarios

- Lucy Bot integration for AI decision-making

- Training pipeline development for specific automation tasks

The 97-step installation process provides hard-won expertise that transfers to similar enterprise AI deployments. The real value lies not in following marketing tutorials, but in understanding the practical challenges of production deployment.

Resources

Installation scripts and configuration files used in this deployment are available at the Viking ARC GitHub repository. These represent working configurations, not theoretical procedures.

For organizations facing similar integration challenges, documentation of actual working configurations proves more valuable than marketing materials. The gap between promise and reality remains significant in enterprise AI deployment.

Bill Campbell is Director of AR/XR Cobot Development at Lavorro Inc., specializing in AI/ML systems for semiconductor manufacturing. He combines deep semiconductor industry expertise with hands-on AI robotics development for industrial automation applications.

What's your experience with AI robotics deployment? Share your own installation battles and working solutions in the comments below.